Neuroscientist and philosopher Sam Harris describes a scenario that is both terrifying and likely to occur. It’s not, he says, a good combination.

I’m going to describe how the gains we make in artificial intelligence could ultimately destroy us. And, in fact, I think it’s very difficult to see how they won’t destroy us or inspire us to destroy ourselves. And yet if you’re anything like me, you’ll find that it’s fun to think about these things. And that response is part of the problem.

It’s as though we stand before two doors

One of the things that worries me most about the development of AI at this point is that we seem unable to marshal an appropriate emotional response to the dangers that lie ahead.

Behind door number one …

Given how valuable intelligence and automation are, we will continue to improve our technology if we are at all able to. What could stop us from doing this? A full-scale nuclear war? A global pandemic? An asteroid impact? Justin Bieber becoming president of the United States? The point is, something would have to destroy civilization as we know it. You have to imagine how bad it would have to be to prevent us from making improvements in our technology permanently, generation after generation.

And behind door number two?

The only alternative is that we continue to improve our intelligent machines year after year after year. At a certain point, we will build machines that are smarter than we are, and once we have machines that are smarter than we are, they will begin to improve themselves. And then we risk what the mathematician I. J. Good called an “intelligence explosion”: the process could get away from us. It’s not that our machines will become spontaneously malevolent. The concern is really that we will build machines that are so much more competent than we are that the slightest divergence between their goals and our own could destroy us.

21st century insects

Just think about how we relate to ants. We don’t hate them. We don’t go out of our way to harm them. In fact, sometimes we take pains not to harm them. We step over them on the sidewalk. But whenever their presence seriously conflicts with one of our goals, let’s say when constructing a building, we annihilate them without a qualm. The concern is that we will one day build machines that, whether they’re conscious or not, could treat us with similar disregard.

Deep thinking, deep impact

Intelligence is a matter of information processing in physical systems. We have already built narrow intelligence into our machines, and many of these machines perform at a level of superhuman intelligence already. Intelligence is either the source of everything we value or we need it to safeguard everything we value. It is our most valuable resource. So, we want to do this. We have problems that we desperately need to solve. We want to cure diseases like Alzheimer’s and cancer. We want to understand economic systems. We want to improve our climate science. So we will do this, if we can. The train is already out of the station, and there’s no brake to pull.

Where do we stand?

We don’t stand on a peak of intelligence, or anywhere near it, likely. This really is the crucial insight. This is what makes our situation so precarious, and this is what makes our intuitions about risk so unreliable. It seems overwhelmingly likely that the spectrum of intelligence extends much further than we currently conceive, and if we build machines that are more intelligent than we are, they will very likely explore this spectrum in ways that we can’t imagine, and exceed us in ways that we can’t imagine.

Warp speed intelligence

Imagine if we built a superintelligent AI that was no smarter than your average team of researchers at Stanford or MIT. Well, electronic circuits function about a million times faster than biochemical ones, so this machine should think about a million times faster than the minds that built it. So you set it running for a week, and it will perform 20,000 years of human-level intellectual work, week after week after week. How could we even understand, much less constrain, a mind making this sort of progress?
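The arithmetic behind that figure can be checked in a few lines. This is only a rough sketch: it takes the million-fold speedup from the paragraph above at face value, and the function and constant names are illustrative assumptions, not anything from the talk.

```python
# Speedup of electronic over biochemical circuits, as stated in the text.
SPEEDUP = 1_000_000
# Average calendar year length in days (an assumption used for the conversion).
DAYS_PER_YEAR = 365.25


def human_equivalent_years(wall_clock_days, speedup=SPEEDUP):
    """Years of human-level work done in the given number of real days."""
    return wall_clock_days * speedup / DAYS_PER_YEAR


# One week of machine time, expressed in years of human-level work.
years_per_week = human_equivalent_years(7)
print(round(years_per_week))
```

The result comes out a little over 19,000 years per week, which the talk rounds up to 20,000.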

Best case scenario?

The other thing that’s worrying, frankly, is this: imagine the best-case scenario. We hit upon a design of superintelligent AI that has no safety concerns. We have the perfect design the first time around. It’s as though we’ve been handed an oracle that behaves exactly as intended. Well, this machine would be the perfect labor-saving device. So, we’re talking about the end of human drudgery. We’re also talking about the end of most intellectual work. Now, that might sound pretty good, but ask yourself what would happen under our current economic and political order. It seems likely that we would witness a level of wealth inequality and unemployment that we have never seen before. Absent a willingness to immediately put this new wealth to the service of all humanity, a few trillionaires could grace the covers of our business magazines while the rest of the world would be free to starve.

The next big arms race

What would the Russians or the Chinese do if they heard that some company in Silicon Valley was about to deploy a superintelligent AI? This machine would be capable of waging war, whether terrestrial or cyber, with unprecedented power. This is a winner-take-all scenario. To be six months ahead of the competition here is to be 500,000 years ahead, at a minimum. So it seems that even mere rumors of this kind of breakthrough could cause our species to go berserk.
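The 500,000-year figure follows from the same million-fold speed advantage cited earlier in the piece: half a year of real time multiplied by a million. A minimal sketch of that conversion, with hypothetical names chosen only for illustration:

```python
def lead_in_human_years(lead_months, speedup=1_000_000):
    """Convert a real-time lead (in months) into years of human-level work,
    assuming the machine thinks `speedup` times faster than a human."""
    return lead_months / 12 * speedup


# A six-month head start, measured in years of human-level work.
six_month_lead = lead_in_human_years(6)
print(six_month_lead)
```

Under that assumption a six-month lead is worth 500,000 years of human-level work, and the "at a minimum" reflects that a self-improving system would only widen the gap.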

“Don’t worry your pretty little head about it.”

At this moment, one of the most frightening things is the kind of thing that AI researchers say when they want to be reassuring. And the most common reason we’re told not to worry is time. This is all a long way off, don’t you know? This is probably 50 or 100 years away. No one seems to notice that referencing the time horizon is a total non sequitur. If intelligence is just a matter of information processing, and we continue to improve our machines, we will produce some form of superintelligence. And we have no idea how long it will take us to create the conditions to do that safely. And if you haven’t noticed, 50 years is not what it used to be. 50 years is not that much time to meet one of the greatest challenges our species will ever face.

Direct-to-brain technology

Another reason we’re told not to worry is that these machines can’t help but share our values because they will be literally extensions of ourselves. They’ll be grafted onto our brains, and we’ll essentially become their limbic systems. Take a moment to consider that the path being recommended as the safest and only prudent way forward is to implant this technology directly into our brains. Now, this may in fact be the safest and only prudent path forward, but usually one’s safety concerns about a technology have to be pretty much worked out before you stick it inside your head.

We must consider these scenarios, and act

I don’t have a solution to this problem, apart from recommending that more of us think about it. I think we need something like a Manhattan Project on the topic of artificial intelligence. Not to build it, because I think we’ll inevitably do that, but to understand how to avoid an arms race and to build it in a way that is aligned with our interests. When you’re talking about superintelligent AI that can make changes to itself, it seems that we only have one chance to get the initial conditions right, and even then we will need to absorb the economic and political consequences of getting them right. We have to admit that we are in the process of building some sort of god. Now would be a good time to make sure it’s a god we can live with.

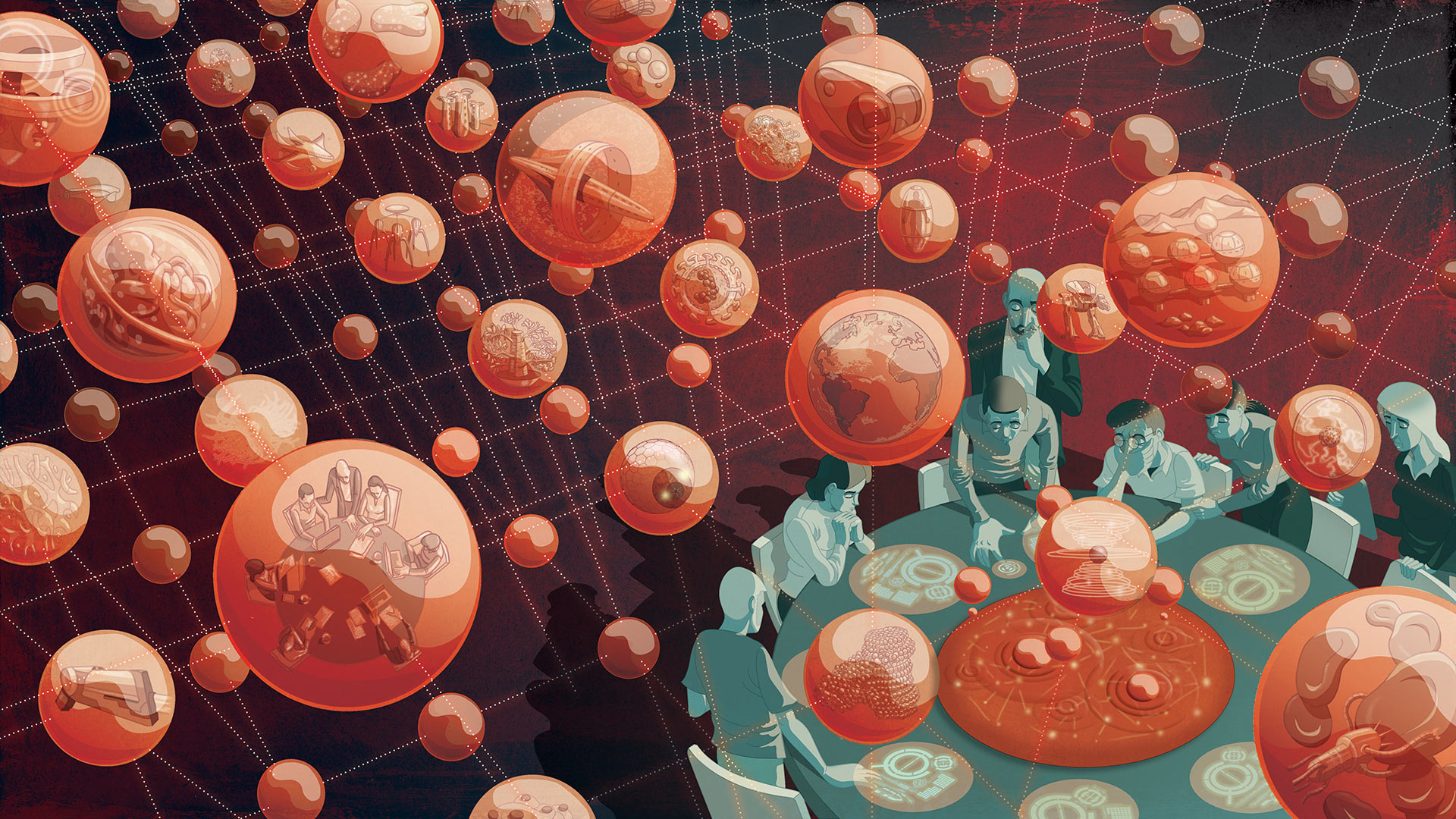

Illustrations by Paul Lachine.