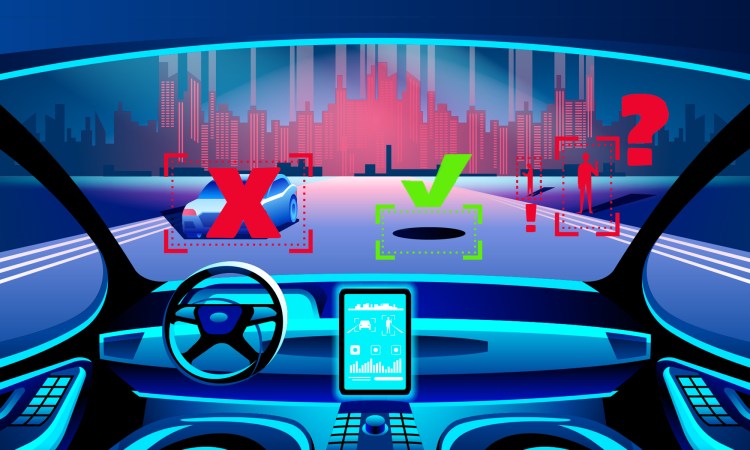

We’ve been told that AI-driven cars would soon be all over our roads, but where are they? Writer Janelle Shane explains how our world, with all its unpredictable challenges (pedestrians, sinkholes and kangaroos, to name a few), is testing the capabilities of even the most advanced artificial intelligence.

Car manufacturers know: There’s a huge amount of interest in AI-driven cars. Many people would love to automate the task of driving, because they find it tedious or at times impossible. A competent AI driver would have lightning-fast reflexes, would never weave or drift in its lane, and would never drive aggressively. An AI driver would never get tired and could take the wheel for endless hours while we humans nap or party.

While AI does need huge volumes of data to program and guide it, that shouldn’t be a problem. We can accumulate lots of example data by paying human drivers to drive around for millions of miles. And we can easily build virtual driving simulations so that the AI can test and refine its strategies in sped-up time.

The memory requirements for driving are modest, too. This moment’s steering and velocity don’t depend on things that happened five minutes ago. Navigation takes care of planning for the future. Road hazards like pedestrians and wildlife come and go in a matter of seconds. Finally, controlling a self-driving car is so difficult that we don’t have other good solutions — AI is the solution that’s gotten us the furthest so far.

Yet it’s an open question whether driving is a narrow enough problem to be solved with today’s AI or whether it will require something more. So far, AI-driven cars have proved able to drive millions of miles on their own, and some companies report that a human has needed to intervene on test drives only once every few thousand miles or so. But it’s that rare need for intervention that is proving tough to eliminate fully.

Humans have needed to rescue the AIs of self-driving cars from a variety of situations. Companies usually disclose only the number of these so-called disengagements (some jurisdictions require them to report it by law), not the reasons for them. This may be in part because the reasons can be frighteningly mundane.

In 2018, a research paper listed some of these reasons. Among other things, the cars in question:

• Saw overhanging branches as an obstacle;

• Got confused about which lane another car was in;

• Decided that the intersection had too many pedestrians for it to handle;

• Didn’t see a car exiting a parking garage; and

• Didn’t see a car that pulled out in front of it.

A fatal accident in March 2018 was the result of a situation like this. A self-driving car’s AI had trouble identifying a pedestrian, classifying her first as an unknown object, then as a bicycle, and finally, with only 1.3 seconds left for braking, as a pedestrian. The problem was compounded by the fact that the car’s emergency braking systems had been disabled in favor of alerting the car’s backup driver, yet the system was not designed to actually alert the backup driver. The backup driver had also spent many, many hours riding with no intervention needed, a situation that would leave the vast majority of us less than alert.

Another fatal accident, in 2016, also resulted from an obstacle-identification error. In that case, a driver used Tesla’s Autopilot feature on city streets instead of on the highways it had been intended for. A truck crossed in front of the car, and the Autopilot AI failed to brake because it didn’t register the truck as an obstacle that needed to be avoided. According to an analysis by Mobileye (which designed the collision-avoidance system), the system had been designed for highway driving, so it had been trained only to avoid rear-end collisions. That is, it had been trained to recognize trucks only from behind, not from the side. Tesla reported that when the AI detected the side view of the truck, it classified it as an overhead sign and decided it didn’t need to brake.

AI cars have encountered many other unusual situations. When Volvo tested its AI in Australia for the first time, the company discovered that it was confused by kangaroos. Apparently it had never before encountered anything that hopped.

Given the variety of things that can happen on a road (parades, escaped emus, downed electrical lines, emergency signs with unusual instructions, lava and sinkholes), it’s inevitable that something will occur that an AI never saw in training. It’s a tough problem to build an AI that can deal with something completely unexpected: one that knows an escaped emu is likely to run wildly around while a sinkhole will stay put, and that understands intuitively that just because lava flows and pools sort of like water does, that doesn’t mean you can drive through a puddle of it.

Car companies are trying to adapt their strategies to the inevitability of mundane glitches or freak weirdnesses of the road. They’re looking into limiting self-driving cars to closed, controlled routes (although this doesn’t necessarily solve the emu problem — those birds are wily) or having self-driving trucks caravan behind a lead human driver. Interestingly, these compromises are leading us toward solutions that look very much like mass public transportation.

Here are the different autonomy levels of self-driving cars:

0 No automation

A Model T Ford qualifies for this level. Car has fixed-speed cruise control, at most. You are driving the car, end of story.

1 Driver assistance

Car has adaptive cruise control or lane-keeping technology (something that most modern cars have). Some part of you is driving.

2 Partial automation

Car can maintain distance and follow the road, but driver must be ready to take over when needed.

3 Conditional automation

Car can drive by itself in some conditions — maybe in traffic jam mode or highway mode. Driver is rarely needed but must be ready at all times to respond.

4 High automation

Car doesn’t need a driver when it’s on a controlled route; the driver can sometimes go in the back and nap. On other routes, car still needs a driver.

5 Full automation

Car never needs a driver. Car might not even have a steering wheel and pedals. Driver can go back to sleep; car has it all under control.

As of now, when a car’s AI gets confused, it disengages: it suddenly hands control back to the human behind the wheel. Automation level 3, or conditional automation, is the highest level of car autonomy commercially available today. In Tesla’s Autopilot mode, for example, the car can drive for hours unguided, but a human driver can be called to take over at any moment.

The problem with this level of automation is that the human had better be behind the wheel and paying attention, not in the back seat decorating cookies. But humans are very, very bad at being alert after spending boring hours idly watching the road. Human rescue is often a decent option for bridging the gap between the AI performance we have and the performance we need, but humans are pretty bad at rescuing self-driving cars.

All of this means that making self-driving cars is both a very attractive and a very difficult AI problem. To get mainstream self-driving cars, we may need to make compromises (such as creating controlled routes and going no higher than automation level 4), or we may need AI that is significantly more flexible than the AI we have now.

Excerpted from the new book You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place by Janelle Shane. Reprinted with permission from Voracious, a division of Hachette Book Group, Inc. Copyright © 2019 by Janelle Shane.