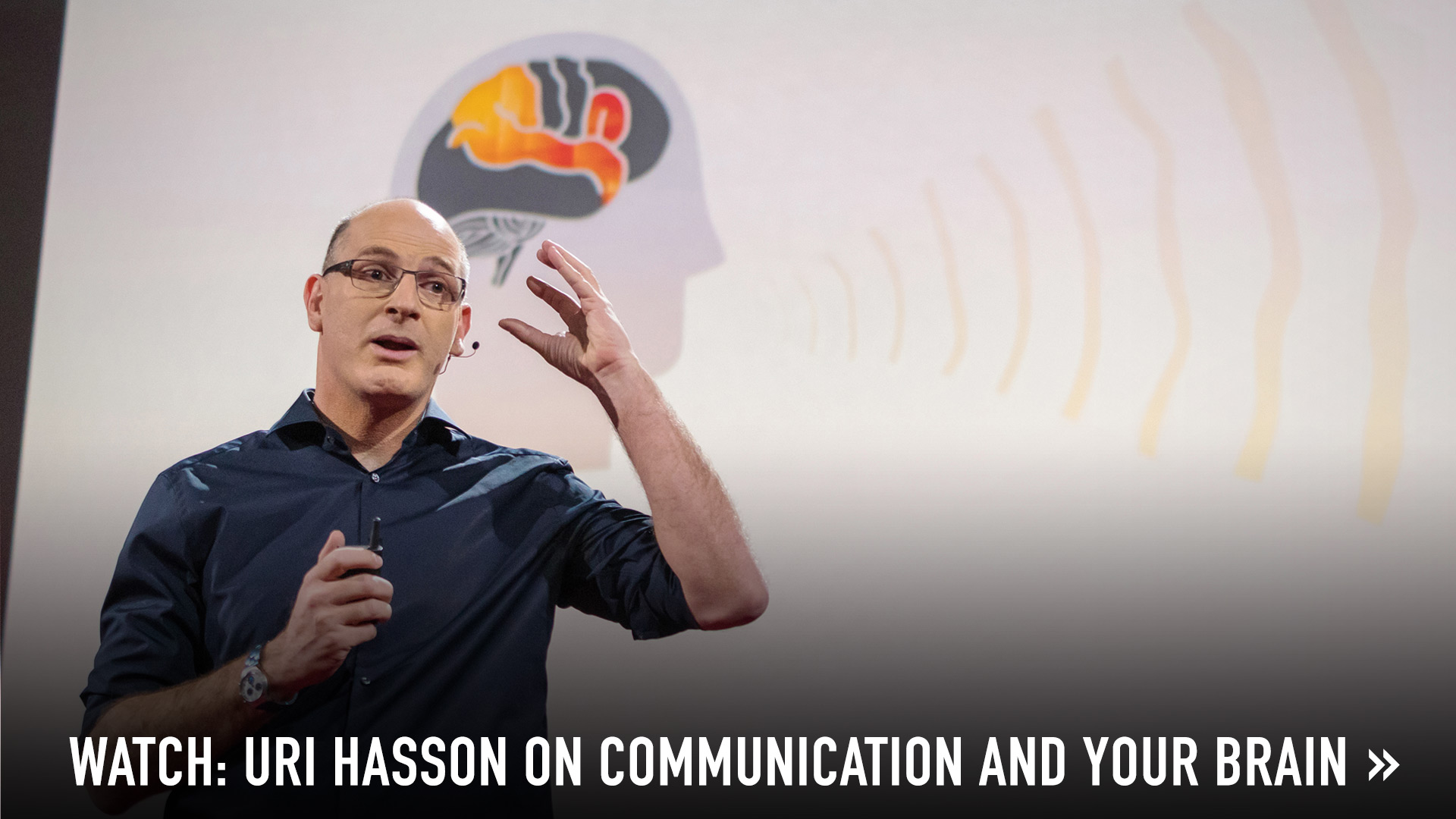

Neuroscientist Uri Hasson takes us inside his lab’s fascinating research — and our heads — to show the meeting of the minds that occurs every time we talk to each other.

Imagine that a device was invented which could record all of my memories, dreams and ideas, and then transmit the entire contents to your brain. Sounds like a game-changing invention, right? In fact, we already possess such a technology — it’s called effective storytelling. Human lives revolve around our ability to share information and experiences, and as a scientist, I’m fascinated by how our brains process interpersonal communication. Since 2008, my lab in Princeton has been focused on the question: How exactly do the neuron patterns in one person’s brain that are associated with their particular stories, memories and ideas get transmitted to another person’s brain?

Through the work in my lab, we believe we’ve uncovered two of the hidden neural mechanisms that enable this exchange: 1) during communication, sound waves uttered by the speaker couple the listener’s brain responses with the speaker’s brain responses; 2) our brains have developed a common neural protocol that allows us to use such brain coupling to share information. Let me take you through the research that brought us here.

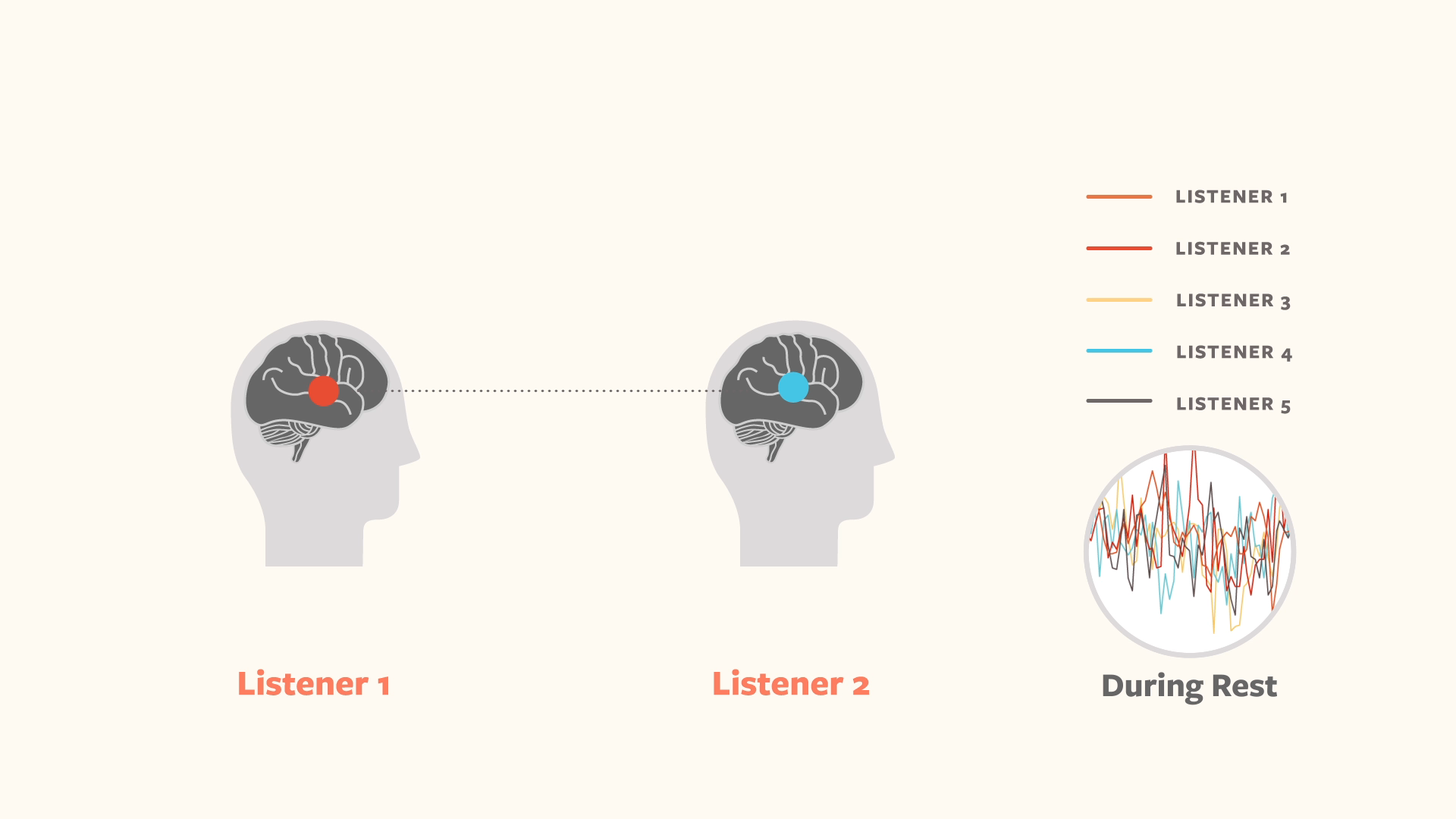

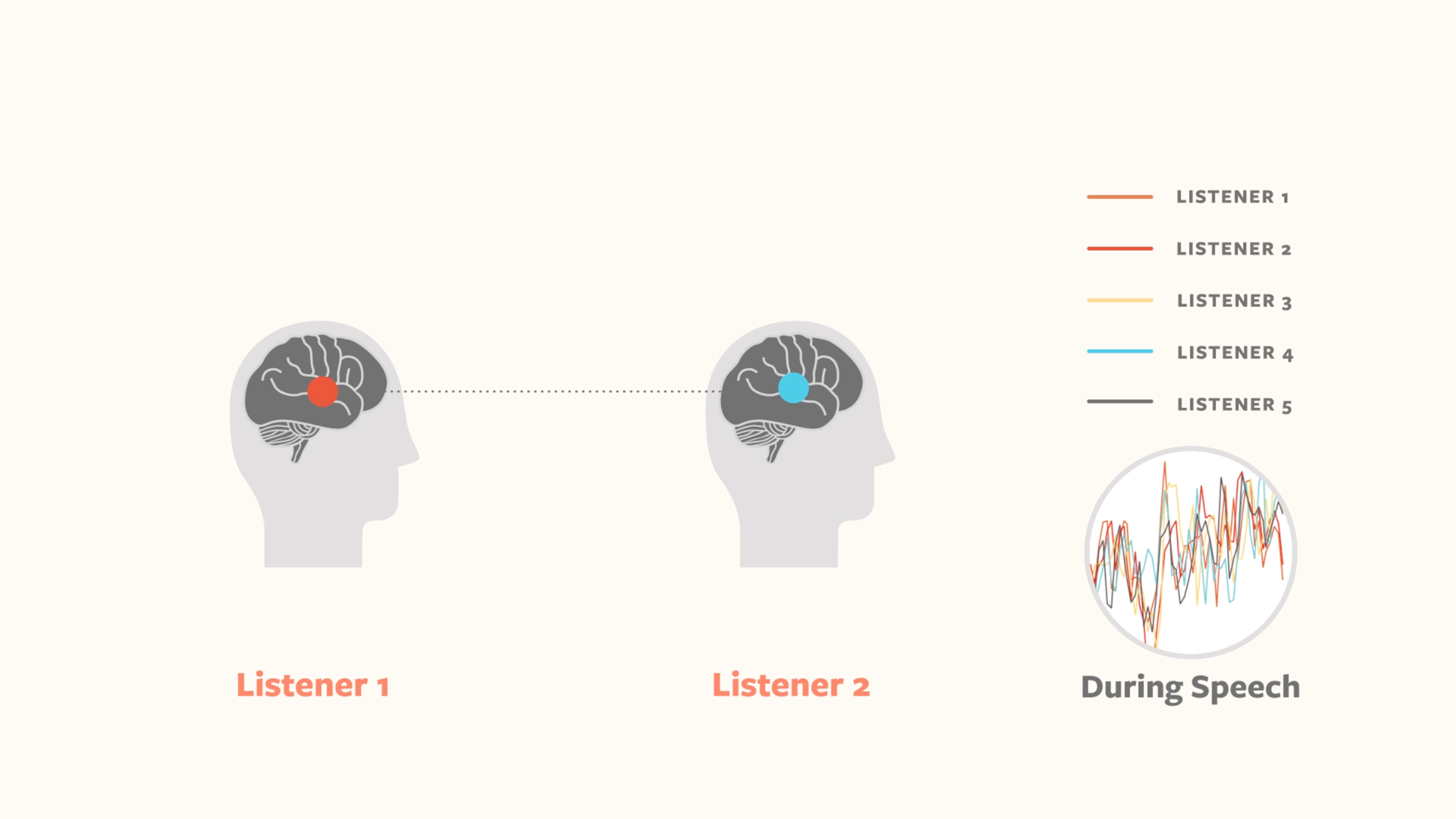

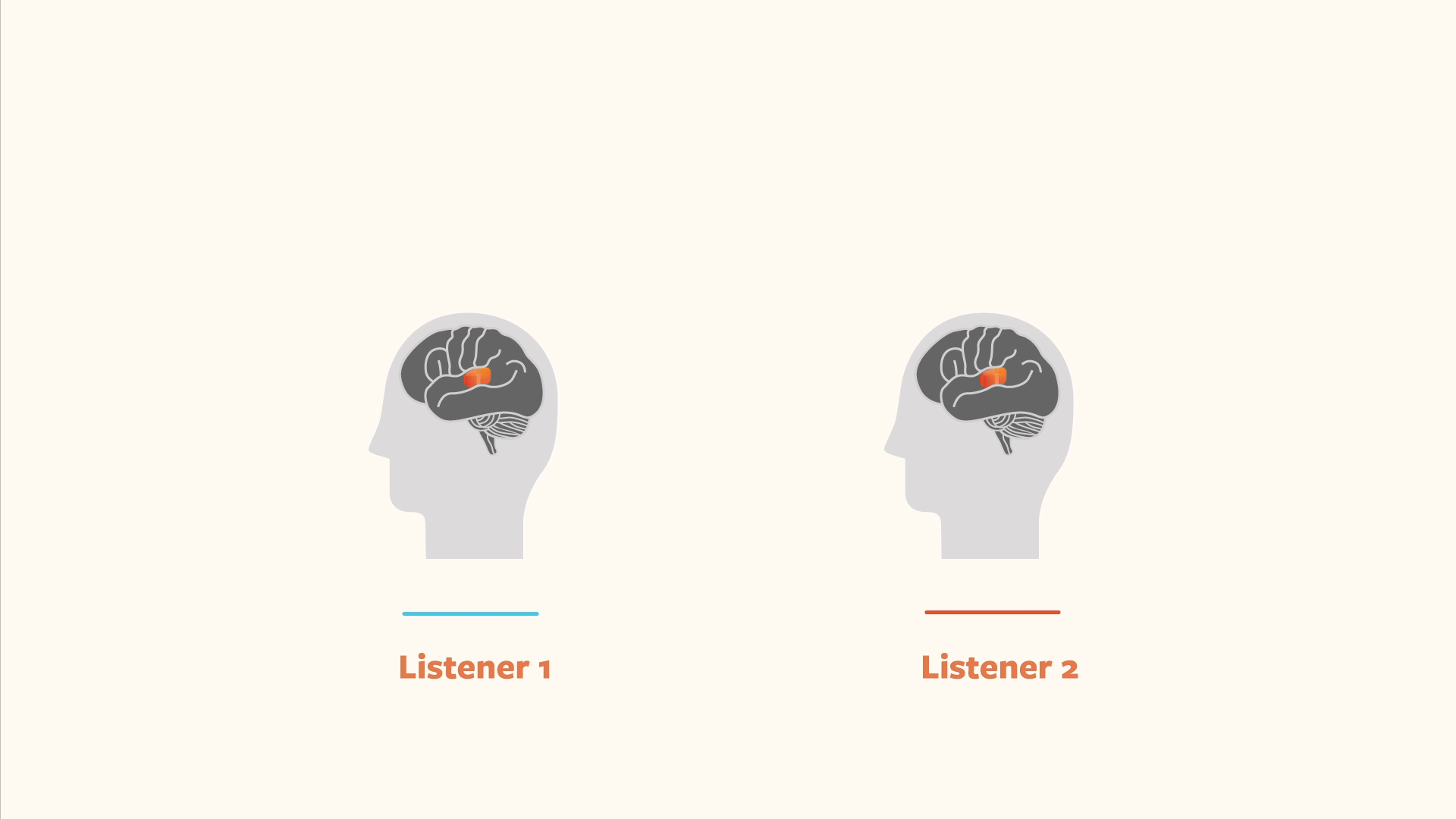

In one experiment, we brought people to the fMRI scanner and scanned their brains while they were either telling or listening to real-life stories. We started by comparing the similarity of neural responses across different listeners in their auditory cortices, the part of the brain that processes the sounds coming from the ear. When we looked at responses before the experiment started, while our five listeners were at rest and waiting for the storyteller to begin, we saw the responses were very different from each other and not in sync (see the inset bubble at right).

However, as soon as the story started, we saw something amazing happen. Suddenly, the neural responses in all of the subjects began to lock together and go up and down in a similar way.
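To make "similar across listeners" concrete, here is a minimal sketch of one way such similarity could be quantified: correlate each listener's response over time with the average response of everyone else. This is an illustration on simulated numbers, not the lab's actual analysis pipeline. The function names, the toy `simulate` model (a shared signal plus private noise) and every parameter value are assumptions made for this example.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def leave_one_out_similarity(responses):
    """Correlate each listener with the average of all the other listeners."""
    scores = []
    for i, own in enumerate(responses):
        others = [r for j, r in enumerate(responses) if j != i]
        mean_others = [sum(vals) / len(vals) for vals in zip(*others)]
        scores.append(pearson(own, mean_others))
    return sum(scores) / len(scores)


def simulate(n_listeners, n_timepoints, shared_weight, rng):
    """Toy responses (an assumption): shared stimulus-driven signal plus private noise."""
    shared = [rng.gauss(0, 1) for _ in range(n_timepoints)]
    return [
        [shared_weight * s + rng.gauss(0, 1) for s in shared]
        for _ in range(n_listeners)
    ]


rng = random.Random(0)
rest = simulate(5, 300, shared_weight=0.0, rng=rng)   # nothing shared at rest
story = simulate(5, 300, shared_weight=2.0, rng=rng)  # strong shared drive

rest_similarity = leave_one_out_similarity(rest)
story_similarity = leave_one_out_similarity(story)
print(f"rest:  {rest_similarity:.2f}")
print(f"story: {story_similarity:.2f}")
```

In this toy setup, the similarity score hovers near zero when the simulated listeners share nothing and rises sharply once a common signal drives all of them, which mirrors the "locking together" described above.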

Scientists call this effect “neural entrainment,” the process by which brain responses become locked to and aligned with the sounds of speech. But what are the factors driving this neural entrainment: the sounds that the speaker produces; the words the speaker is saying; or the ideas that the speaker is trying to convey in their story?

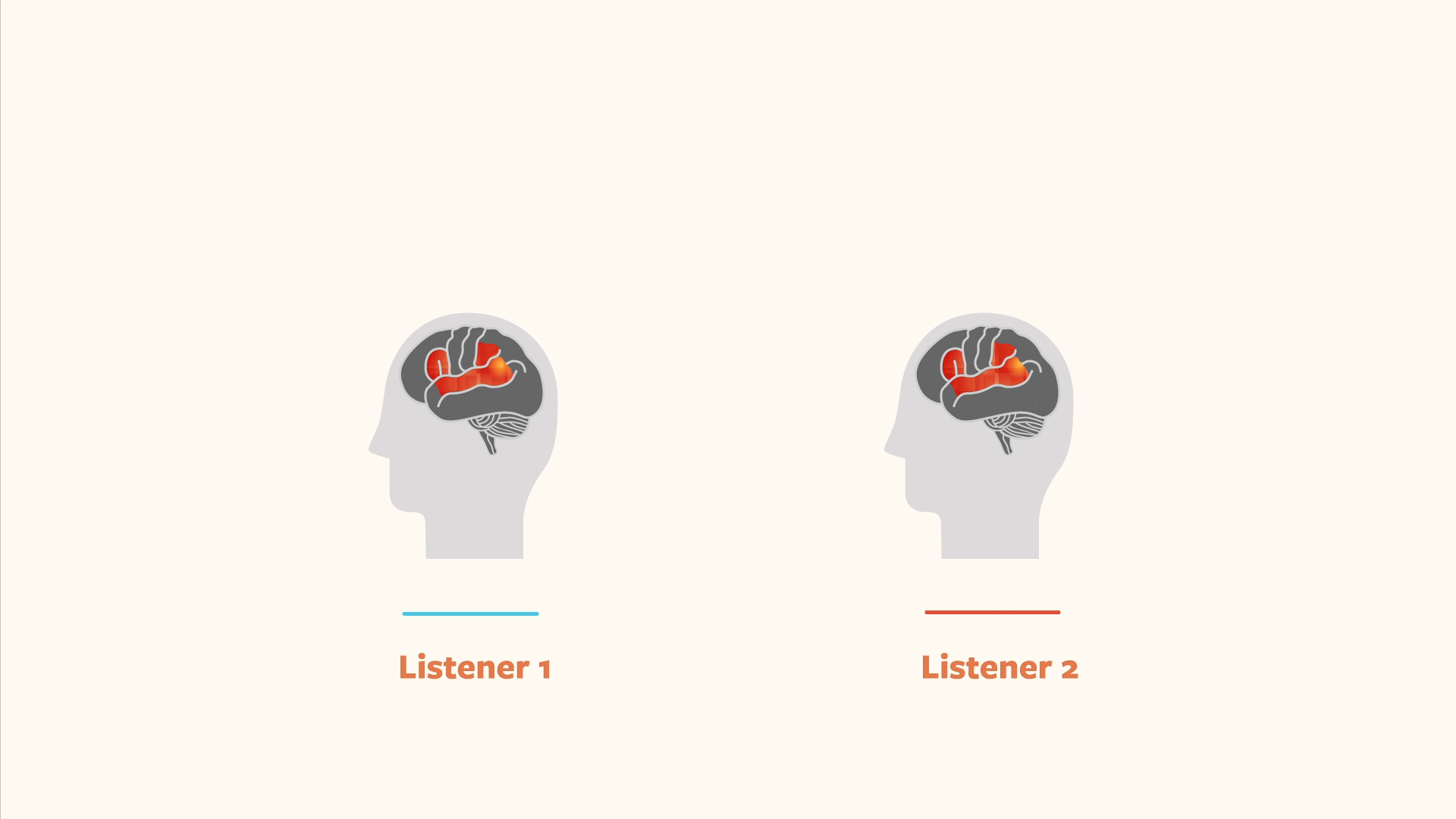

So, we experimented. First, we took the story and played it backwards. This preserved many of the original auditory features but completely removed their meaning. We found the backwards sounds induced entrainment, or alignment, of neural responses in the auditory cortices of all of the listeners but did not spread any deeper into their brains. Based on this result, we concluded the auditory cortex becomes entrained to sounds, regardless of whether the sounds convey any meaning.

Next, we scrambled the words in the story, so while each word was comprehensible, all together it sounded like a list of unconnected words. We saw the words begin to induce alignment in the early language areas of our subjects’ brains, but not beyond that.

Then, we took the words and built sentences from them. But while the sentences individually made sense, they didn’t work together to tell a story. Here’s one sample fragment that listeners heard: “And they recommend against crossing that line. He says: ‘Dear Jim, Good story. Nice details. Didn’t she only know about him through me?’”

When we played this version, we saw neural entrainment extend to all language areas that process grammatically coherent sentences. But only after we played the full, engaging story for listeners did the neural entrainment spread deeper into their brains and induce similar and aligned responses across all subjects in higher-order areas — including the frontal cortex and the parietal cortex (see below).

As a result, we concluded these high-order cortical areas become entrained to the ideas conveyed by the speaker as they construct and assemble sentences into a coherent narrative. If our conclusion was correct, then telling two different listeners the exact same story using two very different sets of words should still produce similar brain responses in these high-order areas. To find out, we did the following experiment. We took our story, which had been told in English, and translated it into Russian.

We played the English story to English listeners and the translated Russian story to Russian listeners, and then we compared their neural responses. We did not see similar responses in their auditory cortices, which was expected because the sounds in each language are very different. However, we observed the responses in their higher-order areas to be similar across the two groups of listeners. We deduced this was because they had a similar understanding of the story, and we confirmed they did by testing them after the story ended.

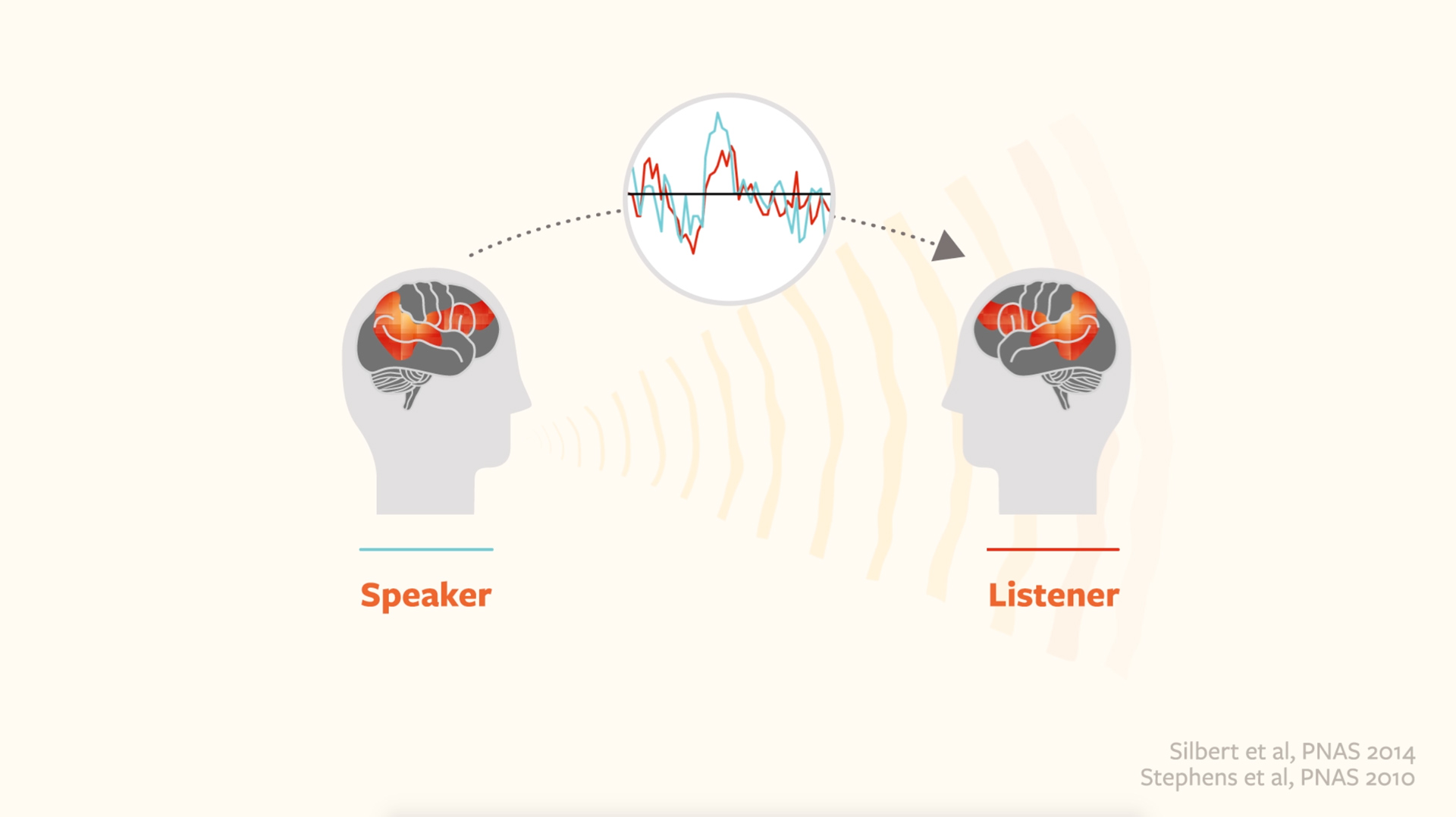

Okay, so now we had an idea of the hierarchical chain of neural processes, from sounds to words to ideas, that happen in a listener’s brain while listening to real-life stories. But what is going on in the speaker’s brain? We asked the storyteller to go into the fMRI scanner and compared his brain responses while telling the story to the brain responses of the listeners listening to the story. Remember, producing speech and comprehending speech are two very different processes. But to our surprise, we saw the brain responses in the listeners’ brains while listening to the story were actually coupled to and similar to the brain responses we observed in the speaker’s brain while telling the story (see below).

What’s more, we found the better the listener’s understanding of the speaker’s story, the stronger the similarity between the listener’s brain and the speaker’s brain. In other words, when speaker and listener really understood each other, their brain responses became coupled and were very similar.
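The relationship between understanding and coupling can be sketched as a two-step calculation: first measure how strongly each listener's response tracks the speaker's, then check whether that coupling rises with a comprehension score. The example below is a toy simulation only. The `shares` values, the `comprehension` scores and the signal model are all hypothetical numbers invented for illustration, and the link between them is built into the simulation rather than discovered by it.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(2)
n_timepoints = 300

# Simulated speaker response over time (not real data).
speaker = [rng.gauss(0, 1) for _ in range(n_timepoints)]

# Hypothetical listeners: a larger "share" means more of the speaker's
# signal is present in that listener's simulated response.
shares = [0.2, 0.5, 1.0, 1.5, 2.0, 3.0]

# Hypothetical comprehension quiz scores, assumed to rise with the share.
comprehension = [20, 35, 50, 65, 75, 90]

# Step 1: speaker-listener coupling for each listener.
coupling = []
for share in shares:
    listener = [share * s + rng.gauss(0, 1) for s in speaker]
    coupling.append(pearson(speaker, listener))

# Step 2: does coupling go up with comprehension across listeners?
coupling_vs_comprehension = pearson(coupling, comprehension)
print(f"coupling vs. comprehension: r = {coupling_vs_comprehension:.2f}")
```

With real recordings, the second step is where the finding would show up: a positive correlation would mean that listeners who understood more were also more tightly coupled to the speaker.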

Which led my researchers and me to ask: How can we use such speaker-listener brain coupling to transmit a memory of an experience from one person’s brain to another? So we did the following experiment. We had people watch, for the first time ever, the pilot episode of the BBC TV series Sherlock while we scanned their brains. Later, we asked them to return to the scanner and relate the episode as a story to a person who had never seen it. This experimental design allowed us to trace how the experiences encoded in the viewers’ brains while watching the episode were later recalled from memory and shared with the brain of a listener who hadn’t seen it.

In the episode, Sherlock entered a cab that turned out to be driven by the murderer he had been looking for. As our lab subjects watched this scene, we observed a specific neural pattern emerge in their high-order brain areas. We were really surprised to see that when this scene was recalled and told using spoken language from a viewer to a non-viewer, the same neural patterns emerged in the same high-order areas in the non-viewer’s brain. So neural entrainment can occur even when we are sharing only our memories — not even a real experience — with another person. This study highlights the essential role our common language plays in the process of transmitting our memories to other brains.

Human communication, however, is far from perfect, and in many cases, we fail to communicate our thoughts, or we’re misunderstood. Indeed, sometimes, people may interpret the exact same story in different ways. To understand what happens in such cases, we conducted another experiment. We used the J.D. Salinger story “Pretty Mouth and Green My Eyes,” in which a husband loses track of his wife in the middle of a party. The man ends up calling his best friend, asking, “Did you see my wife?” We told half of the subjects in our study that the wife was having an affair with the best friend; with the other half, we said the wife was loyal and the husband was very jealous.

Interestingly, this one sentence, told to subjects before the story started, was enough to make the brain responses of all the people who believed the wife was having an affair very similar to one another in the same high-order areas that represent the narrative of the story, and different from those of the group who thought the husband was unjustifiably jealous.
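One way to express "similar within a group, different between groups" is to compare the average correlation between pairs of listeners who share a belief with the average correlation between pairs who do not. The sketch below does this on simulated data. The signal model (a common story signal plus a belief-specific signal plus noise), the group size of six and all variable names are assumptions for illustration, not the study's actual design or analysis.

```python
import math
import random
from itertools import combinations, product


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def mean_pairwise(pairs):
    """Average correlation over an iterable of (response_a, response_b) pairs."""
    scores = [pearson(a, b) for a, b in pairs]
    return sum(scores) / len(scores)


rng = random.Random(1)
n_timepoints = 300

# Everyone hears the same story, so part of the response is common to all.
story = [rng.gauss(0, 1) for _ in range(n_timepoints)]
# Hypothetical belief-specific components, one per interpretation.
affair_view = [rng.gauss(0, 1) for _ in range(n_timepoints)]
jealous_view = [rng.gauss(0, 1) for _ in range(n_timepoints)]


def listener(interpretation):
    """Toy response: story signal + interpretation signal + private noise."""
    return [s + i + rng.gauss(0, 1) for s, i in zip(story, interpretation)]


affair_group = [listener(affair_view) for _ in range(6)]
jealous_group = [listener(jealous_view) for _ in range(6)]

# Pairs of listeners who hold the same belief.
within = mean_pairwise(
    list(combinations(affair_group, 2)) + list(combinations(jealous_group, 2))
)
# Pairs made of one listener from each belief group.
between = mean_pairwise(product(affair_group, jealous_group))

print(f"within-group similarity:  {within:.2f}")
print(f"between-group similarity: {between:.2f}")
```

Because the simulated listeners all hear the same story, the between-group similarity is not zero. What separates the groups is the extra similarity that comes from sharing an interpretation.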

This finding has implications beyond our lab experiment. If one sentence was enough to make a person’s brain responses similar to those of people who had the same belief (in this case, the wife is unfaithful) and different from those of people who held a different belief (the husband is paranoid), imagine how this effect might be amplified in real life. As our experiments show, good communication depends on speakers and listeners possessing common ground. Today, too many of us live in echo chambers where we’re exposed to the same perspective day after day. We should all be concerned as a society if we lose our common ground and lose the ability to communicate effectively and share our views with people who are different from us.

As a scientist, I’m not sure how to fix this. One starting point might be to go back to having dialogues with each other, where we take turns speaking and listening. Together we hash out ideas and try to come to a mutual understanding. Such conversations, as our experiments show, may result in coupling our brains to other brains, and the people we’re coupled to define who we are. Think how much you change on a daily basis from your interactions and your coupling with the people you encounter. I think the most important thing is just to keep being coupled to other people, to keep communicating with them and to keep spreading ideas. Because the sum of all of us together, coupled, is far greater than the sum of our parts.

Illustrations courtesy of Uri Hasson